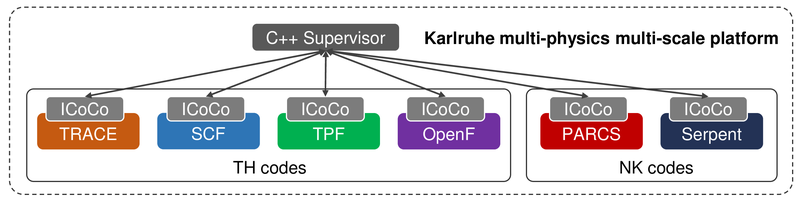

Overview

Modular Structure

The platform integrates a growing collection of in-house and third-party solvers, enabling the coupling of solvers that cover different physical models and different spatial and temporal scales.

Each solver is treated as a module that can be flexibly selected and combined based on the simulation scenario, supporting both development and application of advanced analysis methodologies.

- Core Library (C++): A shared C++ core library provides essential functionality such as mesh handling, parallel communication (MPI), interpolation, and standardized data I/O. This gives all solvers and coupling modules efficient, scalable, and reusable components, promoting consistency and performance throughout the platform.

ICoCo Interface

The modules are coordinated by a C++-based supervisor through the ICoCo (Interface for Code Coupling) standard, providing a standardized API for initializing, advancing, and exchanging field data between solvers.

- Solver Adapters: Each solver is wrapped by a custom adapter module that translates its domain-specific logic into the ICoCo-standard interface. These adapters expose solver inputs/outputs in a unified format, enabling seamless coupling while maintaining solver independence from the coupling logic. This promotes extensibility, interoperability, and decoupling between physics models and numerical solvers.
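The adapter idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `CouplingProblem` is a simplified stand-in whose method names only loosely echo an ICoCo-style interface, and `ToyHeatSolver` is a hypothetical solver with its own native API. It is not the ICoCo specification or any adapter shipped with the platform.

```python
from abc import ABC, abstractmethod
from typing import List


class CouplingProblem(ABC):
    """Hypothetical, simplified coupling interface (not the real ICoCo API)."""

    @abstractmethod
    def initialize(self) -> None: ...

    @abstractmethod
    def solve_time_step(self, dt: float) -> None: ...

    @abstractmethod
    def set_input_field(self, name: str, values: List[float]) -> None: ...

    @abstractmethod
    def get_output_field(self, name: str) -> List[float]: ...

    @abstractmethod
    def terminate(self) -> None: ...


class ToyHeatSolver:
    """Hypothetical solver with its own domain-specific method names."""

    def __init__(self, n_cells: int) -> None:
        self.temp = [300.0] * n_cells  # cell temperatures in K
        self.rate = [0.0] * n_cells    # heating rates in K/s

    def load_power(self, rate: List[float]) -> None:
        self.rate = list(rate)

    def march(self, dt: float) -> None:
        self.temp = [t + dt * r for t, r in zip(self.temp, self.rate)]


class ToyHeatAdapter(CouplingProblem):
    """Translates the unified interface into ToyHeatSolver calls."""

    def __init__(self, n_cells: int) -> None:
        self._n_cells = n_cells
        self._solver = None

    def initialize(self) -> None:
        self._solver = ToyHeatSolver(self._n_cells)

    def solve_time_step(self, dt: float) -> None:
        self._solver.march(dt)

    def set_input_field(self, name: str, values: List[float]) -> None:
        if name != "power_density":
            raise KeyError(name)
        self._solver.load_power(values)

    def get_output_field(self, name: str) -> List[float]:
        if name != "temperature":
            raise KeyError(name)
        return list(self._solver.temp)

    def terminate(self) -> None:
        self._solver = None


# The supervisor only ever talks to the unified interface.
adapter = ToyHeatAdapter(n_cells=3)
adapter.initialize()
adapter.set_input_field("power_density", [1.0, 2.0, 3.0])
adapter.solve_time_step(dt=0.5)
temperature = adapter.get_output_field("temperature")
adapter.terminate()
```

Because the supervisor depends only on `CouplingProblem`, swapping in another solver means writing another adapter, with no change to the coupling logic.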

Mesh and Field Exchange

The exchange of physical quantities between solvers is based on explicit mesh descriptions. Solvers provide or consume fields (e.g., temperature, power density) that are interpolated or projected across meshes using the MEDCoupling library.

This mesh-based communication supports domain-decomposition coupling strategies (e.g., core-to-core, vessel-to-vessel) as well as domain-overlapping schemes (e.g., subchannel-to-CFD, porous-to-1D), ensuring spatial consistency across physical domains.
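To make the idea of projecting a field between non-matching meshes concrete, here is a minimal one-dimensional sketch in plain Python. It does not use the MEDCoupling API; the function name `remap_conservative` and the sample meshes are hypothetical. The sketch weights each source cell by its overlap length with the target cell, which preserves the integral of the field.

```python
def remap_conservative(src_edges, src_values, dst_edges):
    """Map cell-averaged values from a 1D source mesh onto a 1D target mesh.

    Each target value is the overlap-length-weighted average of the source
    cells it intersects, so the integral of the field is preserved when both
    meshes cover the same interval.
    """
    dst_values = []
    for j in range(len(dst_edges) - 1):
        lo, hi = dst_edges[j], dst_edges[j + 1]
        total = 0.0
        for i in range(len(src_edges) - 1):
            overlap = min(hi, src_edges[i + 1]) - max(lo, src_edges[i])
            if overlap > 0.0:
                total += src_values[i] * overlap
        dst_values.append(total / (hi - lo))
    return dst_values


# Hypothetical example: two coarse cells mapped onto three finer cells.
coarse_edges = [0.0, 2.0, 4.0]
coarse_power = [100.0, 200.0]        # cell-averaged power density
fine_edges = [0.0, 1.0, 3.0, 4.0]
fine_power = remap_conservative(coarse_edges, coarse_power, fine_edges)
```

The middle target cell straddles both source cells and receives their average, while the total integrated power is the same on both meshes.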

HPC Compatibility

For large-scale simulations, KAMPS is fully compatible with HPC clusters and supercomputers:

- Parallel execution via MPI and OpenMP

- SLURM/PBS batch script generation

- Scalable from desktop workstations to Tier-1 infrastructures
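As an illustration of what batch script generation for a hybrid MPI/OpenMP run can look like, the sketch below builds a SLURM script as a string. It is not the platform's own generator: the function `make_slurm_script`, the job name, and the executable `./coupled_run` are hypothetical. Only standard `sbatch` directives and the standard `SLURM_CPUS_PER_TASK` environment variable are used.

```python
def make_slurm_script(job_name, nodes, tasks_per_node, cpus_per_task,
                      walltime, command):
    """Return the text of a SLURM batch script for a hybrid MPI/OpenMP job."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",  # MPI ranks per node
        f"#SBATCH --cpus-per-task={cpus_per_task}",     # OpenMP threads per rank
        f"#SBATCH --time={walltime}",
        "",
        # One OpenMP thread per CPU that SLURM allocated to each rank.
        "export OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK}",
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 2 nodes, 4 MPI ranks per node, 8 threads per rank.
script = make_slurm_script(
    job_name="coupled_case",
    nodes=2,
    tasks_per_node=4,
    cpus_per_task=8,
    walltime="01:00:00",
    command="./coupled_run case.inp",
)
```

Writing `script` to a file and submitting it with `sbatch` would request the resources above. A PBS variant would need different directives.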

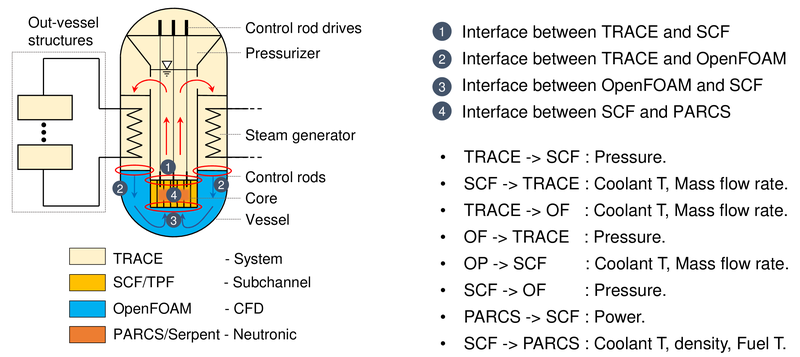

Coupling

Current Verified Coupling Pairs

In principle, the modular design allows any solver to be coupled with any other. In practice, coupling is currently limited to the following verified solver combinations:

- TRACE / SCF

- TRACE / OpenFOAM

- PARCS / SCF / TPF

- SCF / OpenFOAM

- TRACE / Serpent2

- Others in development
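A coupling workflow could guard against unverified combinations with a simple lookup, sketched below. The function `is_verified` is hypothetical, and treating "PARCS / SCF / TPF" as a single three-solver combination is an assumption about how that entry should be read.

```python
# Verified combinations as listed above. Reading "PARCS / SCF / TPF" as one
# three-way combination is an assumption made for this sketch.
VERIFIED_COMBINATIONS = {
    frozenset({"TRACE", "SCF"}),
    frozenset({"TRACE", "OpenFOAM"}),
    frozenset({"PARCS", "SCF", "TPF"}),
    frozenset({"SCF", "OpenFOAM"}),
    frozenset({"TRACE", "Serpent2"}),
}


def is_verified(*solvers):
    """Return True if exactly this set of solvers is a verified combination."""
    return frozenset(solvers) in VERIFIED_COMBINATIONS


trace_scf_ok = is_verified("SCF", "TRACE")         # order does not matter
parcs_openfoam_ok = is_verified("PARCS", "OpenFOAM")
```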

Coupling Flexibility & Limitations

Current limitations arise from practical constraints such as:

- Incompatible mesh structures

- Solver-specific assumptions

- Lack of standardized interfaces

Extending the platform to new solver combinations requires additional development and validation.

Key Coupling Strategies

Loose (Operator-Splitting) Coupling

Each solver advances independently per timestep, and data is exchanged at synchronization points.

- Suitable for weakly coupled systems (e.g., TRACE ↔ PARCS)
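The loop structure of loose coupling can be sketched with two toy models standing in for real solvers. Both models, their parameters, and the function names are hypothetical: a "neutronics" function returns power with a linear temperature feedback, and a "thermal" function advances a lumped temperature explicitly. Each solver runs once per timestep, and data is exchanged only at the synchronization point.

```python
def power_from_temperature(temp, p0=100.0, alpha=0.001, t_ref=500.0):
    """Toy neutronics model: power falls linearly as temperature rises."""
    return p0 * (1.0 - alpha * (temp - t_ref))


def advance_temperature(temp, power, dt, heat_capacity=50.0, h=0.2,
                        t_cool=500.0):
    """Toy thermal model: explicit Euler step of a lumped energy balance."""
    return temp + dt * (power - h * (temp - t_cool)) / heat_capacity


def run_loose(n_steps, dt, temp0=500.0):
    """Operator-splitting loop: one pass through each solver per timestep."""
    temp = temp0
    history = []
    for _ in range(n_steps):
        # Solver A uses the temperature from the previous synchronization.
        power = power_from_temperature(temp)
        # Solver B advances with the power that solver A just produced.
        temp = advance_temperature(temp, power, dt)
        history.append((power, temp))
    return history


history = run_loose(n_steps=3, dt=1.0)
```

No iteration happens within a timestep, so the power used in each step lags the temperature by one synchronization interval. This is acceptable when the feedback is weak or the timestep is small.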

Strong (Iterative) Coupling

Solvers iterate within each timestep until convergence is achieved on shared fields.

- Necessary for tightly coupled processes (e.g., subchannel ↔ CFD flow feedback)
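The iteration within a timestep can be sketched as a fixed-point loop over two toy models. The models, parameters, tolerance, and function names are hypothetical and do not represent any solver in the platform. The loop re-evaluates power and temperature until the shared temperature field stops changing.

```python
def power_from_temperature(temp, p0=100.0, alpha=0.001, t_ref=500.0):
    """Toy neutronics model: power falls linearly as temperature rises."""
    return p0 * (1.0 - alpha * (temp - t_ref))


def advance_temperature(temp_old, power, dt, heat_capacity=50.0):
    """Toy thermal model: new temperature from the old state and a power."""
    return temp_old + dt * power / heat_capacity


def solve_step_strong(temp_old, dt, tol=1e-10, max_iter=50):
    """Iterate both solvers within one timestep until temperature converges."""
    temp = temp_old  # initial guess: state from the previous timestep
    for iteration in range(1, max_iter + 1):
        power = power_from_temperature(temp)             # uses latest guess
        temp_new = advance_temperature(temp_old, power, dt)
        if abs(temp_new - temp) < tol:
            return temp_new, power, iteration
        temp = temp_new
    raise RuntimeError("coupling iteration did not converge")


temp_end, power_end, n_iter = solve_step_strong(temp_old=500.0, dt=1.0)
```

At convergence, the temperature is consistent with the power evaluated at that same temperature, which the single-pass scheme does not guarantee. Production schemes often add relaxation or acceleration to this plain fixed-point iteration.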

Hierarchical Multi-scale Coupling

Combines models with different resolution levels, for example:

- Using subchannel analysis (SCF) to feed boundary conditions to detailed CFD simulations (OpenFOAM)

- Using porous media simulations (TPF) to inform core-wide flow behavior

Future Enhancements

- Integration of KANECS, the KIT in-house core simulator, for higher-fidelity neutronics coupling

- Coupling with ATHLET, a licensed thermal-hydraulics system code widely used in industry

- Automated interface generation and mesh mapping routines

- Improved user interface for coupling workflow design via SALOME GUI